Yes, you should hire college-educated computer scientists

Daniel Gelernter, CEO of Dittach, has a WSJ op-ed titled "Why I’m Not Looking to Hire Computer-Science Majors":

The thing I look for in a developer is a longtime love of coding -- people who taught themselves to code in high school and still can't get enough of it...

The thing I don't look for in a developer is a degree in computer science. University computer science departments are in miserable shape: 10 years behind in a field that changes every 10 minutes. Computer science departments prepare their students for academic or research careers and spurn jobs that actually pay money. They teach students how to design an operating system, but not how to work with a real, live development team.

There isn't a single course in iPhone or Android development in the computer science departments of Yale or Princeton. Harvard has one, but you can’t make a good developer in one term. So if a college graduate has the coding skills that tech startups need, he most likely learned them on his own, in between problem sets. As one of my developers told me: "The people who were good at the school part of computer science -- just weren’t good developers." My experience in hiring shows exactly that. (...)

Now, full disclosure: I'm a computer science major, and Harvard's course in iPhone development is taught by my current boss. Then again, I've had a longtime love of coding, taught myself to code in ~~high~~ middle school and still can't get enough of it, and so on. But I still have so many things to say about this, which I hope aren't too biased by my individual perspective.

(1)

It may be the case that most university computer science departments across the US are "in miserable shape", that they're "10 years behind in a field that changes every 10 minutes", that their graduates are ill-equipped for "jobs that actually pay money", &c. Maybe Gelernter knows better than I do, given his "experience in hiring". But he name-drops my school, so I think I've got grounds to comment here.

I have no idea from whence he gets "They teach students how to design an operating system, but not how to work with a real, live development team." Harvard's very first course for computer science majors -- for which I'm a teaching fellow this semester -- culminates in a team-based project. I don't think I've literally ever had an individual project in the computer science department. (edit: I did an individual project for Data Visualization, but I had to secure special permission, since it was against class policy.) I learned how to design an operating system...in a team, where maintaining and extending code other people wrote was the not-so-secret focus of the course.

As for "10 years behind in a field that changes every 10 minutes", I quote my Operating Systems professor, Margo Seltzer:

If you educate a student well, they should be able to learn how to develop in whatever language and environment you're using. Criticizing programs because they don't specifically teach iOS or Android is like saying, "You learned to swim in a pond? Why that means you can't possibly swim well in a pool, lake, or ocean."

Six months into a job, I'll bet on any of our graduates compared to anyone out of a program that tries to teach specific tools and environments over how to think, how systems work, why software is designed how it is, etc. Sure I make my students write an OS -- they learn a ton about concurrency, synchronization, resource management -- try building scalable software without those skills/knowledge. (private post, quoted with permission)

From the comments on her post:

Ten years ago he'd be complaining that we weren't teaching Win32 development, and what's this useless Java thing? If anything, we're teaching ten years ahead of industry.

Ore Babarinsa, who has at times appeared on this blog:

His example of iOS development shows just how shallow this position is. Apple is completely dropping Objective-C as their marquee development platform for iOS in favor of Swift, so quite literally anyone who spent the last 4 years learning iOS programming (which, since the advent of reference counting in iOS 5 has already changed massively), had all of their skills invalidated as a result of one single WWDC.

Especially given how easy it is for someone with a background in programming language theory to pick up a language and API, and just how quickly languages and APIs change these days, I just don't see how any of this pans out positively for Mr. Gelernter.

To summarize: If the field changes every ten minutes, should students learn 350 different frameworks in a standard 60-credit degree program? How, logistically, does Gelernter propose that his developers adapt to change every 10 minutes when "you can't make a good developer in one term", even with a dedicated course?

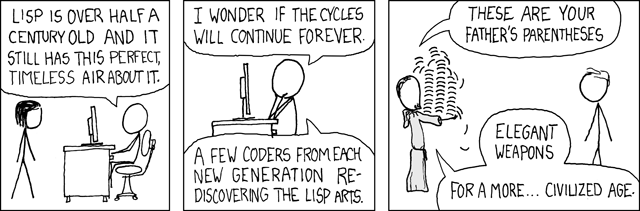

Maybe it doesn't matter so much, then, if computer science majors are trained in the venerable tools of yesteryear rather than the ad-hoc frameworks of today? If they're going to deal with an estimated half-million new paradigms over their careers as software developers, maybe it's more important to understand where those paradigms' shared intellectual DNA came from in the first place -- namely, dinosaurs like assembly and C, 'useless' topics like operating systems and compiler design, and challenges that modern tools gloss over (sometimes entirely), such as resource management, concurrency, and synchronization.

(2)

The second enormous problem in Gelernter's op-ed is a touch more subtle, but in many ways, even more noxious:

The thing I look for in a developer is a longtime love of coding -- people who taught themselves to code in high school and still can't get enough of it. (...)

In 2013, no female, African American, or Hispanic students in Mississippi or Montana took the Advanced Placement exam in computer science, according to statistics released by the College Board and compiled by Barbara Ericson, of Georgia Tech. Further:

In fact, no African-American students took the exam in a total of 11 states, and no Hispanic students took it in eight states...

The College Board, which oversees AP, notes on its website that in 2013 about 30,000 students total took the AP exam for computer science, a course in which students learn to design and create computer programs. Less than 20 percent of those students were female, about 3 percent were African American, and 8 percent were Hispanic (combined totals of Mexican American, Puerto Rican, and other Hispanic).

For comparison, among computer science majors at Stanford, 30.3% were female, 6.1% were black, and 9.5% were Hispanic, according to one student's informal study. A similar study by Winnie Wu of Harvard found 27% female, 3% black, and 5% Hispanic. No, I am not proud of my school in this regard, though it does blow Google and Facebook both completely out of the water -- their technical staff are 18% / 16% female, 1% / 1% black, and 3% / 2% Hispanic, respectively.

Nevertheless, speaking in terms of general trends:

- Among computer science majors, women are disproportionately likely to have matriculated to college intending to study some other subject.

- Among computer science majors, underrepresented racial minorities are disproportionately likely to have little-to-no prior experience in computer science.

- If you only want to hire programmers who taught themselves to code, you're going to be hiring a lot of socioeconomically advantaged, white and/or Asian men.

So...what's the gender ratio like at Dittach?

...each developer sees his work changing the product on a daily basis...

...if a college graduate has the coding skills that tech startups need, he most likely learned them on his own...

I hate to speculate uncharitably, but using male pronouns for your developers by default isn't a good look. I don't have actual data here, but I'm willing to guess that Dittach's strategy of "hire all the high school whizzes" isn't doing a lot of good for diversity among their developers.

(3)

There's a pernicious message just below the surface of this, the repackaging of an old, tired refrain: If you're not a real [X] person, don't bother -- you won't make it.

I see a great deal of this attitude in the three Harvard departments I'm most intimately familiar with -- computer science, mathematics, and physics -- with the same effect in each: a constant winnowing out of the less confident, those worried by imposter syndrome, and those prone to stereotype threat. While it may mean something that a student from a privileged background didn't bother to explore certain opportunities before college (e.g. to teach themselves to code, to pursue advanced coursework in mathematics), it means very little that a student from a less-privileged background did not do so, if they simply never had the chance.

But of course, try explaining that to the subconscious mind of the student feeling very out-of-place in the subject that they love, because, not only does everyone else look different than them, everyone else (it seems) is a real [X] person, and they're not. Even if the world does, in fact, mostly divide into real [X] people and "not-real" [X] people, those with backgrounds and positions of privilege are more likely to over-confidently assume that they're in the former group, while those with backgrounds and positions of less privilege will be too quick to assume that they're actually of the latter. The cultures of communities are made up of the small, shared assumptions we make, and this particular framing of the world is one with real teeth.

(4)

"But Ross!" one interrupts, "All that stuff about disproportions and privilege and selectively leaky pipelines might be true, but I'm at a startup, and I still want to hire only the best developers. And while I know that it's not a requirement that they have taught themselves to program at age twelve, you can't deny the statistical fact that that group has more excellent programmers than college majors who have only been at it for four years!"

And yes, sure. It may be easier to hire a technically skilled programmer out of that group than any other. But that's not all that you're looking for.

Programmers who started when they were twelve are used to doing things on their own. The majority of projects that they've worked on have been theirs, from start to finish. They're certainly not used to working in groups.

And the students who weren't teaching themselves to code in high school weren't doing nothing in that time -- they had other interests, other hobbies, and are able to bring those to your development team. When you're producing, say, an app for organizing email attachments, it actually makes a great deal of sense to actively search for people who think differently, who once "weren't computer people", or used email solely to communicate with friends, or only used it very sparingly for a long time.

What you don't want is a team of developers who were always power-users, who can't remember what it's like to only-kinda understand different filetypes, or what reply-all is, or what have you. You want, as much as you can get, developers who are diverse, who can bring different perspectives, and who look like your customer base. You don't get that by only hiring "real" computer scientists.

(5)

I won't address it here, because this post is delayed enough, but Stephen Brennan has a fine response to Gelernter on his own blog. I agree with Brennan on some things, and believe that he's missing the mark on other things, but I certainly invite you to form your own opinion on his response.

addendum: This talk by Eric Meyer is an incredible explanation of some of the ways in which diversity of experience holds immense and underappreciated value in designing technical systems. Seriously, I cannot recommend it enough for anyone who has plans of ever working on front-end software development.