Metaculus has some issues

In Zvi's 2/11 Covid update, he turned to Metaculus for help. He looked at the numbers. Because the man is an inveterate trader, he saw odds that were Wrong On The Internet and just couldn't stop himself from creating an account to bet against them. And then he saw the payout structure and decided he was done after making a single prediction.

I spent some time with the Metaculus site and figured out how they borked this one up enough to drive away Zvi Mowshowitz. I'll try to explain it here.

(1)

Here's a presumably current description of the scoring function that I found in the FAQ, slightly abridged:

Your score \(S(T;f)\) at any given time \(T\) is the sum of an "absolute" component and a "relative" component: \[S(T;f)=a(N)\times L(p;f)+b(N)\times B(p;f),\] where \(N\) is the number of predictors on the question.

If we define \(f=1\) for a positive resolution of the question and \(f=0\) for a negative resolution, then \(L(p;f)=\log_2(p/0.5)\) for \(f=1\) and \(L(p;f)=\log_2((1−p)/0.5)\) for \(f=0\). The normalizations \(a(N)=30+10\log_2(1+N/30)\) and \(b(N)=20\log_2(1+N/30)\) depend on \(N\) only.
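The two weights and the absolute component can be written down directly from these formulas. Here is a minimal Python sketch; the function names are illustrative, and the betting score \(B(p;f)\) is left out because its exact definition isn't reproduced here.

```python
import math


def a(num_predictors: int) -> float:
    """Weight on the absolute (log-score) component: a(N) = 30 + 10*log2(1 + N/30)."""
    return 30 + 10 * math.log2(1 + num_predictors / 30)


def b(num_predictors: int) -> float:
    """Weight on the relative (betting) component: b(N) = 20*log2(1 + N/30)."""
    return 20 * math.log2(1 + num_predictors / 30)


def log_score(p: float, f: int) -> float:
    """L(p; f): log2(p/0.5) on a positive resolution (f=1),
    log2((1-p)/0.5) on a negative resolution (f=0)."""
    if f == 1:
        return math.log2(p / 0.5)
    return math.log2((1 - p) / 0.5)


# With N = 210 predictors, 1 + N/30 = 8, so both weights come out to 60.
print(a(210), b(210))
# Predicting 50% scores zero; predicting 100% on a "yes" scores 1.
print(log_score(0.5, 1), log_score(1.0, 1))
```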

The "betting score" \(-2\lt B(p;f)\lt 2\) represents a bet placed against every other predictor. This is described under "constant pool" scoring on the Metaculus scoring demo (...)

I'll try to describe that in friendlier terms.

When you make a prediction alongside \(N\) other people, write \(N=30n-30\) (so \(n=1+N/30\)). Then you put your internet points behind two different bets (which get multiplied by 10 to get Metaculus points, but let's skip that for now):

- You bet \(3+\log_2(n)\) times against the house, with a log scoring rule. (Your logarithmic score has negative expected value unless your prediction is fairly close to the true probability; predicting exactly 50% scores zero either way.)

- You bet \(2\times\log_2(n)\) times against "the community predictions", which means you look at each other prediction and bet into it at its odds(!) for \(1/N\) size. (No one bets into you at your odds, I think because of some issue with proper scoring rules.)
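Whether the house bet is favorable depends on how close your prediction is to the truth. A minimal sketch, assuming the event truly happens with probability \(q\) (here the 56% figure from the worked example): the expected log score per unit of bet peaks at \(p=q\), is exactly zero at \(p=0.5\), and drops below zero once \(p\) strays far enough from \(q\). The function name is illustrative.

```python
import math


def expected_house_score(p: float, q: float) -> float:
    """Expected L(p; f) per unit of bet when the event truly happens with probability q."""
    return q * math.log2(p / 0.5) + (1 - q) * math.log2((1 - p) / 0.5)


q = 0.56  # assumed true probability, borrowed from the worked example
for p in (0.30, 0.50, 0.56, 0.70):
    print(f"predict {p:.2f}: expected log score per unit = {expected_house_score(p, q):+.3f}")
```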

When \(n=8\) (~200 people), the two bets are the same size (6 each), but when \(n=128\) (~4000 people), the second bet is 140% the size of the first (14 versus 10).
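The reparametrization is easy to check numerically. A minimal sketch (helper names are illustrative) that divides \(a(N)\) and \(b(N)\) by 10 and prints both bet sizes for a few crowd sizes:

```python
import math


def n_from_predictors(num_predictors: int) -> float:
    # n = 1 + N/30, so that N = 30n - 30
    return 1 + num_predictors / 30


def house_size(n: float) -> float:
    # a(N) / 10 = 3 + log2(n)
    return 3 + math.log2(n)


def community_size(n: float) -> float:
    # b(N) / 10 = 2 * log2(n)
    return 2 * math.log2(n)


for num in (0, 210, 3810, 30690):  # n = 1, 8, 128, 1024
    n = n_from_predictors(num)
    print(num, house_size(n), community_size(n))
```

Because the community size grows twice as fast in \(\log_2 n\) as the house size, the community bet dominates more and more on heavily predicted questions, and with a single predictor (\(n=1\)) it vanishes entirely.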

Also notice that if your prediction equals the median community prediction, the second bet is a zero-exposure arbitrage that makes you a profit equal to half of the difference between [the average prediction of the bullish half] and [the average prediction of the bearish half].
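The zero-exposure claim can be checked with a deliberately simplified model of the community bet, taken from the description above: against each other prediction \(p_i\), you place a \(1/N\)-sized bet at odds \(p_i\), buying "yes" if your prediction is higher and selling if it is lower. This is a hypothetical sketch, not Metaculus's actual \(B(p;f)\), and `community_payoff` is an illustrative name.

```python
def community_payoff(my_p: float, others: list[float], outcome: int) -> float:
    """Hypothetical linear model of the community bet.

    Buying "yes" at price p_i pays (outcome - p_i); selling pays (p_i - outcome).
    Each bet is 1/N of the total, so the result is an average.
    """
    total = 0.0
    for p_i in others:
        if my_p > p_i:    # more bullish than them: buy "yes" at their price
            total += outcome - p_i
        elif my_p < p_i:  # more bearish than them: sell "yes" at their price
            total += p_i - outcome
        # identical predictions: no bet
    return total / len(others)


others = [0.3] * 2000 + [0.7] * 2000
median = 0.5
# Same payoff whether the question resolves "yes" (1) or "no" (0).
print(community_payoff(median, others, 1), community_payoff(median, others, 0))
```

Predicting the median leaves equal numbers of buys and sells, so the outcome terms cancel and only the price difference between the two halves remains.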

Let's work an example.

Rounding some of the numbers from above, let's say that 2000 people predict 30% and 2000 people predict 70%. Then each user is making a size=12 bet against the house, and will win 4.8 if it settles in their favor, losing 7.4 if it settles against them. (These bets are negative-sum for users.)

But each user is also making a size=18 one-sided bet against "community predictions", which is a bet where one half either pays 8.3 in their favor or loses 3.55 against them, and the other half is a scratch.

Now say that the event is just over 56% to happen, and let's see what happens to those people who predicted 30%:

- Their bets against the house are losing 2.03 in expectancy.

- Their bets against the "community" are winning 1.66 in EV.

- With 18 community bets and 12 house bets, the 30% predictors end up profiting an average of 5.57 in overall EV.

(The 70% predictors are up 48.7 points in EV.)

(2)

This is a bit busted -- but it's not really mathematically busted or theoretically busted. I do believe the proof on the page about the scoring system that shows it's a proper scoring rule -- so that if you knew that the event was 56% to happen, then 56% is your best bet. Sure. But if the system gives you +5.5 internet points for betting 30% into a market that's split 50/50 between 30% and 70% and making that consensus objectively worse, then what should we expect to happen?

Taken together, I read this as evidence that proper scoring rules are not sufficient to optimally incentivize information aggregation. The proof goes like this:

- Metaculus's scoring rule is a proper scoring rule.

- Metaculus's scoring rule incentivizes behavior different from "inject useful information into the system".

- Therefore, proper scoring rules do not necessarily guarantee that participants are incentivized to inject useful information into the system.

Consider a hypothetical platform called Alephculus, which posts yes-or-no questions about tomorrow's weather and lets users predict probabilities. Users get points according to some strictly proper, weakly positive scoring rule -- for any question, you'll score the most points by submitting your true guess of the probability, and the worst you can do is 0 points. Also, if the formulation of the question is found to have sufficient Kabbalistic significance, then the points are multiplied by one million.

From the perspective of a weather-prediction-synthesis platform, users' point scores might...leave something to be desired. But (assuming people cared about Alephculus points) this might be a fine way to incentivize decentralized research into the Kabbalistic significance of your prediction questions! Presumably it's not hard to get some reasonable guess about the weather, but figuring out which are the high-value questions is a big deal...

The scoring rule for each "market" is still proper, but the meta-scoring rule for the Alephculus "marketplace" is incentivizing Kabbalistic study, rather than research on the weather. Alas.
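A toy sketch makes the Alephculus point concrete. The quadratic rule \(1-(f-p)^2\) is just one possible strictly proper, weakly positive rule (the example above doesn't name one), and the probabilities and multiplier are hypothetical. The multiplier leaves each question's best prediction unchanged, but swamps any difference in forecasting skill across questions.

```python
def quadratic_score(p: float, f: int) -> float:
    """Strictly proper, weakly positive: 1 - (f - p)**2, always in [0, 1]."""
    return 1 - (f - p) ** 2


def expected_points(p: float, q: float, multiplier: float = 1.0) -> float:
    """Expected points for predicting p when the true probability is q."""
    return multiplier * (q * quadratic_score(p, 1) + (1 - q) * quadratic_score(p, 0))


grid = [i / 100 for i in range(101)]
q = 0.7  # hypothetical true chance of rain tomorrow

# Within a single question, honesty is still optimal, with or without the multiplier.
for multiplier in (1.0, 1_000_000.0):
    best = max(grid, key=lambda p: expected_points(p, q, multiplier))
    print(multiplier, best, expected_points(best, q, multiplier))

# Across questions, a lazy 50% guess on a "significant" question
# beats a perfect forecast on an ordinary one.
print(expected_points(0.5, q, 1_000_000.0) > expected_points(q, q, 1.0))
```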

(3)

Metaculus isn't as borked as Alephculus, but it has the same kind of problem at its core. Once participants are confronted with the meta-game of choosing where to spend limited resources like time and attention, proper scoring rules don't keep participants from hunting for the cheapest points per unit effort.

What would that look like in Metaculus? I'm not sure yet, but I can speculate.

First, the relative balance of "house" and "community" bet payoffs gives a clear incentive to focus on questions that have many other predictors (or will have many other predictors in the near future). In the limit, if it's just you and the house, why bother? On the other hand, if there are many other people -- especially many other people split between a "low" camp and a "high" camp -- there's a lot more opportunity in getting off a big "community" bet, even if you're doing something relatively dumb.

Actually, we can take "doing something relatively dumb" to the limit, and ask: What predictions can we make for ~~great justice~~ internet points without adding any useful information whatsoever?

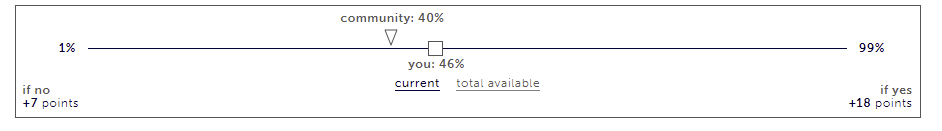

We could start by just predicting the community median -- which maximizes our worst-case profit on the community bet. Or, even better, we could just do the math and find the prediction that will make us indifferent between a "yes" and "no" resolution when considering both the "house" and "community" bets. (Or, if that's too much math, we could just drag the slider until the helpful indicators under "if no" and "if yes" are equal.) Since we don't have any exposure to the actual question, the only thing we need to know is "is my risk-free payout positive?" -- and, if so, lock in the prediction.
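A rough sketch of the "do the math" route, reusing the hypothetical linear community-bet model (not Metaculus's actual formula). It assumes house and community sizes of 10 and 14, which is what \(3+\log_2 n\) and \(2\log_2 n\) give at \(n=128\), and bisects for the prediction where the "if yes" and "if no" payoffs match. All names here are illustrative.

```python
import math


def community_payoff(my_p, others, outcome):
    # Hypothetical linear model: a 1/N-sized bet at each other prediction's odds.
    total = 0.0
    for p_i in others:
        if my_p > p_i:
            total += outcome - p_i
        elif my_p < p_i:
            total += p_i - outcome
    return total / len(others)


def total_payoff(p, others, outcome, house_size, community_size):
    # House leg: log score relative to 50%; community leg: the linear model above.
    house = math.log2(p / 0.5) if outcome == 1 else math.log2((1 - p) / 0.5)
    return house_size * house + community_size * community_payoff(p, others, outcome)


def indifference_prediction(others, house_size, community_size, iters=60):
    """Bisect for the p where the "if yes" and "if no" payoffs are equal.

    The yes-minus-no gap is nondecreasing in p (log-odds plus a step
    function), so a sign change can be located by bisection.
    """
    def gap(p):
        return (total_payoff(p, others, 1, house_size, community_size)
                - total_payoff(p, others, 0, house_size, community_size))

    lo, hi = 1e-6, 1 - 1e-6
    for _ in range(iters):
        mid = (lo + hi) / 2
        if gap(mid) < 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


others = [0.3] * 200 + [0.7] * 200
p_star = indifference_prediction(others, house_size=10, community_size=14)
payout = total_payoff(p_star, others, 1, 10, 14)  # same payout if "no"
print(round(p_star, 4), round(payout, 3))
```

If the printed payout is positive, the prediction is risk-free points under this model, which is exactly the "lock it in" check described above.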

I don't know what the correct answer is, but there are free internet points for predicting 42%, so let's do that and move on. (h/t Zvi, of course)

It would be A Real Shame if someone were to widely disseminate a simple-to-use open-source tool for identifying Metaculus questions where this free-points arbitrage is set up, so that users could, um, make predictions on them. It would be especially ~~effective~~ ~~unfortunate~~ notable because, unlike in prediction markets, the arbitrage doesn't go away when more people do it. On the contrary, more people piling in increases the weight of the "community" bet relative to the "house" bet, making it better for the other people doing likewise. Really would be a shame if someone wrote that open-source tool, yessiree.

(4)

I don't have a completely satisfying solution for the problem yet, but if the good folks at Metaculus think that this critique has teeth and are interested in doing something about it, I'd be happy to work with them on understanding and improving things.

Speaking generally, though, I have a pretty strong suspicion that the root of the problem lies somewhere here:

If we were only asking our players to bet for or against an event happening, then our scoring would be straightforward: we'd set up something like a prediction market with payout proportional to the ratio of people making the opposite bet. Instead, we want our players to predict on the probability of an outcome, not the outcome itself. (...)

I am not convinced that the distinction between "predicting the probability of an outcome" and "predicting the outcome" makes sense in context. In a prediction market, participants trade contracts that settle to 100¢ if "yes" happens and 0¢ if "no" happens, and they trade them for some number of ¢ between 0 and 100. If the highest bid to buy contracts is 19¢ and the lowest offer to sell contracts is at 21¢, then the market is suggesting a probability of 20%. Maybe it's just wildly wrong! But the market, as an aggregate meta-organism, is producing a market-clearing probability out of individual agents' binary bets.

More to the point, if you show up to a prediction market, and you see the contract in question 19¢ bid, 21¢ offered, you can't just make a binary prediction; you have to predict a probability and then decide to buy (because you think it's >21%) or sell (because you think it's <19%) or neither (because you think it's approximately fair). This is a proper scoring rule! The way to maximize your expected profits is to do all of the trades that look good to your belief about the probability!
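The decision rule described above fits in a few lines. A minimal sketch, with prices expressed as fractions of a dollar (19¢ becomes 0.19) and ignoring fees and position sizing; `best_trade` is an illustrative name.

```python
def best_trade(belief: float, bid: float, ask: float) -> tuple[str, float]:
    """Best action and expected profit per contract (settles to 1 on "yes",
    0 on "no") for a trader whose probability estimate is `belief`."""
    if belief > ask:
        return "buy", belief - ask    # pay the ask, expect to receive `belief`
    if belief < bid:
        return "sell", bid - belief   # receive the bid, expect to pay `belief`
    return "none", 0.0                # market looks roughly fair


for belief in (0.10, 0.20, 0.35):
    print(belief, best_trade(belief, bid=0.19, ask=0.21))
```

Trading is only profitable in expectation in the direction your belief points, and only by the amount your belief differs from the price, which is why honest beliefs are the profit-maximizing input.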

But also, "trade if the market disagrees with your belief" is the proper scoring rule that is used to decide the allocation of literally trillions of dollars of resources in real life. If it has bad exploits, then we, as a society, almost certainly already know about them. And we know that markets allow a richness that prediction-aggregation doesn't have -- the possibility of doing multiple trades lets agents express their "second-order" knowledge (how strongly they believe what they believe), or "third-order" knowledge (how they think their second-order knowledge compares to others'), and so on, in a way that one-shot proper scoring rules simply cannot hope to match.

edit: Later discussion suggested that this section could be misinterpreted as a suggestion that Metaculus adopt real-money stakes. To be clear, I'm

Taking a step back, I think Metaculus's first-order focus on individual participants' probabilities (rather than taking their actions as inputs into an overall aggregation) leads pretty directly to the flaw that creates these no-information arbitrage opportunities in the first place.

Because the system treats participants' probabilities as first-order objects (and tries to reward the worthiest ones), it's rewarding agents who look at a market split 50/50 between 30% and 70% and say "I predict 50%". (In fact, any time that 50% is the median, you will always get positive points for predicting 50%!)

Sure, in some sense, you're adding information to the market by looking at the split 30%s and 70%s and counting them up and placing your weight on the 50% that they imply. If it's actually, truly 50% to happen, then you are more right than anyone in the 30% camp or the 70% camp, and you should probably get a gold star for your Bayescraft.

But, from another perspective, you're not really adding anything to the market by speaking up to say "50%". Anyone looking at the 30%/70% market could see that the natural aggregate prediction is 50%, and you haven't improved on that at all. You may have seen the light of Bayes, but you are not really helping here, and you should practice your Bayescraft somewhere else, the ideal social planner might say.

But this is not Project Cybersyn, and there's no social planner to tell you to move along, so we need to incentivize that in the point system somehow -- or else we'll just keep incentivizing people to tell us things we could already have guessed for ourselves.

Okay, the Cybersyn OpsRoom is cool, but seriously, have you seen the workstations on a modern prop trading desk?